On Workflows, Flash, Photoshop, and RubyMotion for Games

First, a little background on how I’ve learned to make games.

Phase 1.

I tinkered with some games with Macromedia Director as an undergrad, but I literally remember nothing about Lingo or the Director environment. Whatever I was doing, I was probably doing it wrong. I switched to Processing in grad school and loved it. Processing is very powerful (once you take off the training wheels, it’s really just Java), but bare-bones in terms of a display tree. There’s essentially a single canvas and you issue drawing commands to it. The simplest way to build games with Processing is to just keep your game state in various data structures (arrays for everyone! you get an array, and you get an array, and you get an array…). Every frame you clear the screen and draw everything brand new from your data objects. I ended up with lots of helper functions for each screen, things like “drawTimer,” “drawButtons,” “drawGameBoard.” Everything was vector-based using primitive drawing commands, so tweaking your UI layout meant changing some magic numbers in the code and re-running the app. Cumbersome, but not so bad (there’s only one screen, after all).

Phase 2.

Some time around 2007 or so, I got an alpha invite to Whirled, a Flash-based game/world/thing by Three Rings. I figured I’d better learn Flash so I could make stuff, so I got to work making weird things using pure AS3 and the free Flex compiler (student me: “$800 for Flash Builder? Ain’t nobody got time for that!”). My workflow was essentially unchanged from the Processing days (I was still drawing everything in code), but AS3 has a fricking amazing display tree. A DisplayObjectContainer seems like a “no shit” construct in retrospect, but it sped up development a ton. Even so, tweaking anything UI-based still required changing magic numbers and recompiling. I made a couple of games using embedded bitmaps, but even those were placed by code. After the success of Filler, I picked up a copy of Flash (the program) and used it to make the UI for Filler 2. Again, I did everything wrong. Filler 2 has all the different screens on the main timeline, with the main class as a document class. I was using Flash (the program) as my compiler instead of the Flex compiler, and it was an absolutely terrible workflow–even worse than laying everything out by hand.

Phase 3.

Starting with Color Tangle, I used Flash (the program) the “right” way. Flash (the program) is used to create art, and all the various screens and art pieces are exported in art SWCs. Holy crap-on-a-stick is that faster and more awesome than laying out your games by hand. Instead of focusing your effort on where things go, almost all of your development time is spent focusing on what things do. This seems like a subtle distinction, but it makes a huge difference. Want to move your HUD from the top to the bottom? Change the position of two text fields? Make a button smaller or larger? No problem! All of your interaction logic accesses things by name (e.g. set the text of “hud.scoreLabel” to be “12345”), without a thought or care to where that thing is on screen.

When people jump through hoops to try to get Flash working on mobile, this is the thing they’re trying to preserve. Don’t get me wrong–I like vector graphics and even prefer them in most situations… but ultimately how the graphics are stored is much less important to me than how I interact with them in code.

So what are some other things about Flash (the program) that make it so great for game development?

- All of your layout and creation tools are in one program. This saves a ton of time. With a bitmap-based workflow, you typically need to export your bitmaps/textures at all the right resolutions, import them into a separate layout tool, lay them out, and then export that layout (there are lots of tools for this: Spriter for animation, Interface Builder for iOS UIs, etc.). Or, worse, you take your raw bitmaps and place them via code, which is going to take several iterations of compiling and checking to get right. Basically, every extra step between asset creation and running in-game adds workflow delays.

- Symbol-based workflows more closely match UI paradigms. When using raw Photoshop, there is no concept of a re-usable symbol. You can kind of fake it with layers and groups, but it’s a rough approximation. Having the notion of “this is a progress bar” which you can place on multiple screens is great. Edit the symbol and your changes propagate to everywhere that symbol is used. In a bitmap-based workflow, you need to edit every instance. This can be somewhat mitigated by using an external tool to do your layouts (maybe Spriter?), but I don’t know of any custom-built UI layout tools (other than Interface Builder, which I don’t like).

- The text engine in Flash (the program) is the same as in Flash (the runtime). I kind of hate bitmap fonts, mostly because of how much of a pain in the ass it is to do text layouts with any accuracy.

There are probably others, but those are my big four (including the name-based access in code). There’s probably a big market opportunity for someone to write a symbol-based graphics editor, but that’s more than I’m willing to take on. How am I solving those issues?

After bailing on Adobe AIR for RubyMotion (see my last post for why), I was pretty much stuck with a bitmap-based workflow. All of my UI assets were already implemented in Flash, so I mostly just needed to rasterize them. There are a couple of ways to do that–I’ve been following the development of Flump (also by the Three Rings guys), but it doesn’t support text, and UI layouts probably benefit the least from things like texture atlases (how do you atlas a 2048×1536 background?). I decided to write a Photoshop script to emulate a lot of what Flash does with SWCs, along with a helper class in RubyMotion to help load and manipulate the graphics.

On the asset exporter side, I found a script on the web that exported every layer to its own image and modified it to:

- export metadata for every layer in the Photoshop file (depending on naming conventions); usually just the bounds is enough

- for images, export the full resolution image as an @2x version, then downsize and export the non-@2x version

- if I find a matched pair of “btn something up” and “btn something down”, note in the metadata that this is a button

- if I find a text layer named “text something”, export the font size, color, alignment, and textfield bounds… but not the text

- if a text layer is not named “text …”, rasterize it and export as static text
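The naming rules above could be sketched in plain Ruby roughly as follows. This is not the actual exporter (which runs as a Photoshop script); `classify_layers` and the shape of the metadata hashes are made up for illustration, and since the sketch only sees layer names, anything that isn't a matched button pair or a “text …” layer is simply treated as an image to rasterize.

```ruby
# Hypothetical sketch of the layer-naming conventions; not the real exporter.
BUTTON_PATTERN = /\Abtn (.+) (up|down)\z/

def classify_layers(layer_names)
  # First pass: record which states ("up"/"down") exist for each button name.
  button_states = Hash.new { |hash, key| hash[key] = [] }
  layer_names.each do |layer|
    match = layer.match(BUTTON_PATTERN)
    button_states[match[1]] << match[2] if match
  end

  # Second pass: tag each layer. A button needs BOTH an up and a down layer.
  layer_names.map do |layer|
    button = layer.match(BUTTON_PATTERN)
    if button && button_states[button[1]].sort == %w[down up]
      { name: layer, type: :button, button: button[1], state: button[2].to_sym }
    elsif layer.start_with?("text ")
      { name: layer, type: :text }   # dynamic text: font info and bounds only
    else
      { name: layer, type: :image }  # everything else is exported as a bitmap
    end
  end
end
```

An unpaired “btn something up” layer falls through to the image case here, which is one possible reading of the “matched pair” rule.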

On the RubyMotion side, I built a GameObjectView class which is essentially an extension of UIView with a bunch of helper methods for standard game variables (x, y, rotation, scale) as well as methods to load from metadata. When loading from metadata, the class automatically turns buttons into UIButtons and text fields into UILabels. What used to be a lot of boilerplate asset loading is now basically the same workflow as in AS3. Whereas before I might type something like:

//SomeGameScreen.as

var bg:BackgroundClip = new BackgroundClip();

addChild(bg);

bg.someButton.addEventListener(MouseEvent.CLICK, mouseHandler);

bg.someLabel.text = "hello world";

In my GameObjectView, it goes something more like this:

#some_game_screen.rb (actually probably something like some_game_screen_controller.rb)

@bg = GameObjectView.alloc.initWithFrame(view.frame)

@bg.load_from_metadata("some_game_screen", self) #self is a tap delegate

@bg.labels["some_label"].text = "hello world"

#later on in code land, our tap delegate

def tap(button)

tap_handler if button.name == "some_button"

end

So, in other words, pretty much my same Flash workflow. It’s not all aces, though–there are a couple of downsides to this workflow. Photoshop has no notion of an empty textfield, so in order to export the bounds of a text field there must be actual text in it… but even then, it exports the bounds of the TEXT and not the text field. The text engines for Flash and iOS are close, but not quite close enough–I find that text gets clipped without a little extra padding (and especially so if your default text is smaller than any dynamic text you shove in later). So I end up “filling” all my text fields in photoshops with ones and lowercase p’s–something like this:

It’s certainly in the “I can live with it” category of annoyance. Another annoyance is that the exporter isn’t as solid as Flash’s publish (obviously). Weird things happen sometimes when layers are in groups and it takes a little while to run. If you interrupt it mid-export, your layers could be in a weird state (say, downsized by 50%) which could cause headaches if you don’t catch them (why is my game suddenly rendering at 1/4 the size it was before!!!!!).

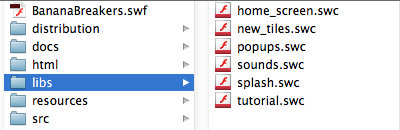

Secondly, this is NOT a performant way to store and load images. Ideally these assets would all be texture packed into nice power-of-two images… but my goal was “fast enough for my purposes” and not “best possible solution.” Because assets get re-used in multiple screens (I have different folders for iPad, iPhone, iPhone4, and for landscape variants of each screen), it’s possible that the same image could be used by different screens or devices but bundled multiple times in different folders. Banana Breakers, for instance, has over 2000 images exported using this method. I use a ruby script as part of my build process to compare the bytes of each image and build a lookup table to match duplicate filenames to the kept copy. For Banana Breakers, this de-duping removes 1200 files (~22 megabytes worth).
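For the curious, a de-duping pass along these lines can be sketched in a few lines of Ruby. This is a rough approximation rather than my actual build script: `build_duplicate_table` is a made-up name, and it compares SHA-256 digests of the file contents as a stand-in for comparing raw bytes directly.

```ruby
require "digest"

# Hypothetical helper: returns a hash mapping each duplicate file path to the
# first path (in sorted order) that has the same contents.
def build_duplicate_table(paths)
  kept_by_digest = {}  # content digest => path of the copy we keep
  duplicates = {}      # duplicate path  => kept path
  paths.sort.each do |path|
    digest = Digest::SHA256.file(path).hexdigest
    if kept_by_digest.key?(digest)
      duplicates[path] = kept_by_digest[digest]
    else
      kept_by_digest[digest] = path
    end
  end
  duplicates
end
```

Something like `build_duplicate_table(Dir.glob("resources/**/*.png"))` (the folder name is just an example) would give a table the loader can consult before falling back to the original filename, and the keys of that table are the files that are safe to drop from the bundle.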

Likewise, I have to be at least a little careful about how I create my images. Large empty/alpha spaces between assets get exported just the same as filled in spaces, so it’s a balance between having lots of images to load vs minimizing file size.

To re-use assets and more closely approximate a SWC library workflow, I usually put multiple assets into each PSD. The only piece of metadata I have to work with is the layer name, though, so I end up using it for a lot of stuff:

- tagging whether a layer is a button, an image, or a textfield

- tagging whether this is a button’s down state or up state

- tagging what “view” this belongs to. I do this with selectors in my load_from_metadata function, something like {:include => "menu", :exclude => "content"}. So, say I have a popups PSD that includes pieces for a confirm and an alert. A confirm and an alert are pretty much identical (an alert has a single “ok” button, a confirm has two buttons for “confirm” and “cancel”). All of the shared pieces end up with names like “btn confirm alert down” and “text confirm alert title”. When creating one, I can do things like

alert = GameObjectView.alloc.initWithFrame(view.frame)

alert.load_from_metadata("popups", self, {:include => "confirm"})
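The selector matching itself is simple. Here is a minimal sketch of the idea in plain Ruby, assuming layer names are split on whitespace and each selector is a single word; `layer_selected?` is a hypothetical helper name, not the real method inside GameObjectView.

```ruby
# Hypothetical selector check: a layer is loaded if its name contains the
# :include word (when given) and does not contain the :exclude word.
def layer_selected?(layer_name, selectors = {})
  words = layer_name.split
  included = selectors[:include].nil? || words.include?(selectors[:include])
  excluded = !selectors[:exclude].nil? && words.include?(selectors[:exclude])
  included && !excluded
end

layers = [
  "btn confirm alert down",   # shared by both popups
  "text confirm alert title", # shared by both popups
  "btn confirm cancel up",    # confirm only
  "btn alert ok up"           # alert only
]

confirm_layers = layers.select { |name| layer_selected?(name, { :include => "confirm" }) }
alert_layers   = layers.select { |name| layer_selected?(name, { :include => "alert" }) }
```

With these made-up layer names, the shared pieces show up in both lists, while the cancel button only loads for the confirm and the ok button only loads for the alert.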

So how does it do overall?

It solves my two biggest needs: an asset-name-based workflow and content/layout in a single program. I don’t think there’s a way to make Photoshop suck less when it comes to symbol-based workflows, but I can live with that. The text parts are not perfect, but work well enough that it’s not a huge slowdown. I can write my game screens with code that is essentially blind to differences between devices, and just have a different image folder for each supported resolution.

A couple of people have asked me why I use native views and not Cocos2D, which now has an excellent RubyMotion wrapper in Joybox… mostly I never saw the point! Cocos2D has some great features (easy physics integration, node-based display tree, tweens, sound manager), but for simple games the native display tree is more than adequate. Unless you’re blitting hundreds of bitmaps onscreen, the native views are plenty fast for even fairly complex layouts. A sound manager is trivial to write. Core Animation is surprisingly good. So, basically, I didn’t see anything in Cocos2D that I wasn’t already getting with native views. Learning and mastering the native view architecture would make integrating third-party code easier (as well as writing non-game apps), and I would never run into any weird “how do I make X work with Cocos2D” issues.